GPT-Minus1: A Random Word Substitution Tool for GPT Text

GPT-Minus1 rewrites text by randomly replacing words with synonyms, with the aim of improving the accuracy and performance of text generation models. Perfect for refining your GPT outputs!

What is GPT-Minus1?

GPT-Minus1 is a unique tool that modifies text by randomly substituting words with their synonyms. It is intended to improve the efficiency and precision of text generation models by introducing slight variations through this substitution process.

GPT-Minus1's Core Features

GPT-Minus1 offers these primary features:

- Substitution of words with synonyms
- Randomization of word replacements
- Enhancement of text generation model performance

GPT-Minus1's Use Cases

FAQ from GPT-Minus1

What is GPT-Minus1?

GPT-Minus1 is a tool that modifies text by randomly substituting words with synonyms. It aims to improve text generation models' performance and accuracy through subtle word substitutions.

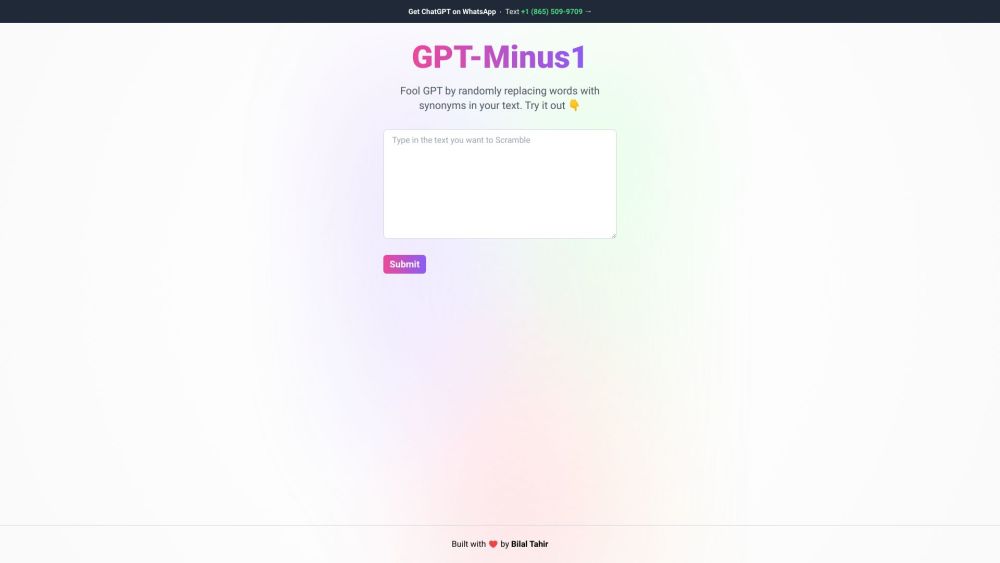

How to use GPT-Minus1?

To use GPT-Minus1, input your text into the tool and click 'Scramble.' The tool will create a modified version of the text by replacing words with their synonyms, which can then be used to test or improve text generation models.
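The substitution step described above can be sketched in a few lines of Python. This is only an illustration of random synonym replacement, not the tool's actual implementation: the `THESAURUS` dictionary, the `scramble` function, and its `rate` and `seed` parameters are all hypothetical names chosen for this example.

```python
import random
import re

# Hypothetical mini-thesaurus for illustration only; the synonym source
# used by the real tool is not documented here.
THESAURUS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}


def scramble(text, rate=0.5, seed=None):
    """Replace each known word with a random synonym with probability `rate`."""
    rng = random.Random(seed)

    def swap(match):
        word = match.group(0)
        synonyms = THESAURUS.get(word.lower())
        if not synonyms or rng.random() >= rate:
            return word  # leave unknown or unselected words untouched
        choice = rng.choice(synonyms)
        # Preserve a leading capital letter.
        return choice.capitalize() if word[0].isupper() else choice

    # Only alphabetic runs are candidates; punctuation and spacing pass through.
    return re.sub(r"[A-Za-z]+", swap, text)


print(scramble("The quick dog is happy.", rate=1.0, seed=0))
```

With `rate=1.0` every word found in the thesaurus is replaced, and with `rate=0.0` the text comes back unchanged. Passing a `seed` makes a run repeatable, which can help when comparing model behavior on the original and scrambled versions.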

Can I use GPT-Minus1 with any text generation model?

Yes. GPT-Minus1 works on the text itself rather than on a particular model, so its output can be used with any text generation model to add word variations intended to enhance the model's performance.

Does GPT-Minus1 only replace nouns with synonyms?

No, GPT-Minus1 replaces words of any type with synonyms, providing a broad range of text modifications.

Is GPT-Minus1 compatible with multiple languages?

Yes, GPT-Minus1 works with text in multiple languages, provided it has access to an appropriate thesaurus for synonym substitution.
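The dependence on a per-language thesaurus can be illustrated with a small Python sketch. The `THESAURI` table, the language codes, and the `substitute` function are assumptions made for this example and do not describe how GPT-Minus1 stores or selects its synonym data.

```python
import random

# Hypothetical per-language thesauri, keyed by language code.
THESAURI = {
    "en": {"house": ["home", "dwelling"]},
    "es": {"casa": ["hogar", "vivienda"]},
}


def substitute(words, lang, rng=None):
    """Swap each word for a random synonym from the thesaurus for `lang`."""
    rng = rng or random.Random()
    thesaurus = THESAURI.get(lang)
    if thesaurus is None:
        # Without a thesaurus for the language, no substitution is possible.
        raise ValueError(f"no thesaurus available for language {lang!r}")
    return [rng.choice(thesaurus[w]) if w in thesaurus else w for w in words]


print(substitute(["mi", "casa"], "es"))
```

In this sketch a language without a thesaurus raises an error instead of silently returning the input, so a missing synonym source is easy to notice.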

Can GPT-Minus1 perfectly fool text generation models?

GPT-Minus1 introduces variations into text, but it will not always fool text generation models. Success depends on how well the specific model detects and adapts to these changes.