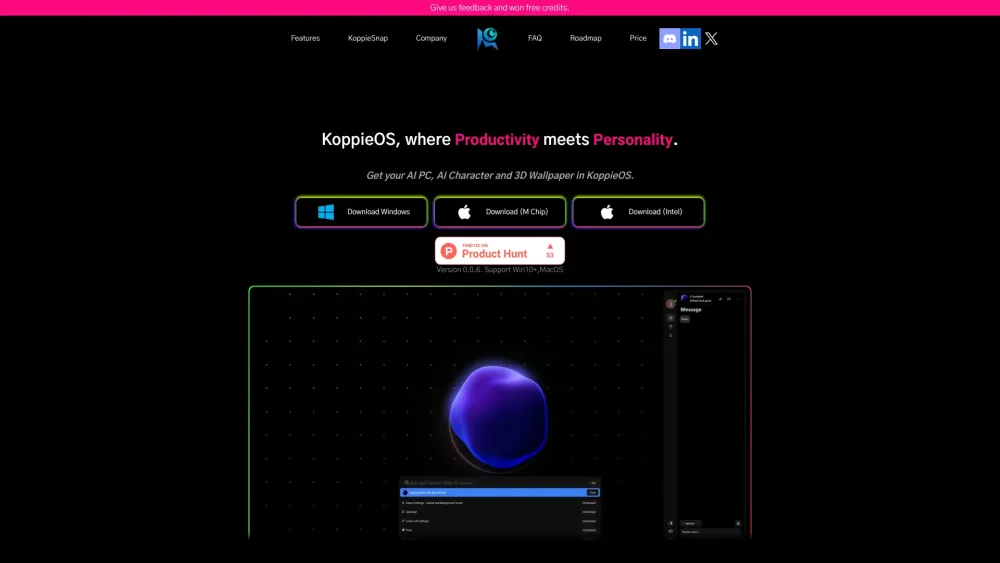

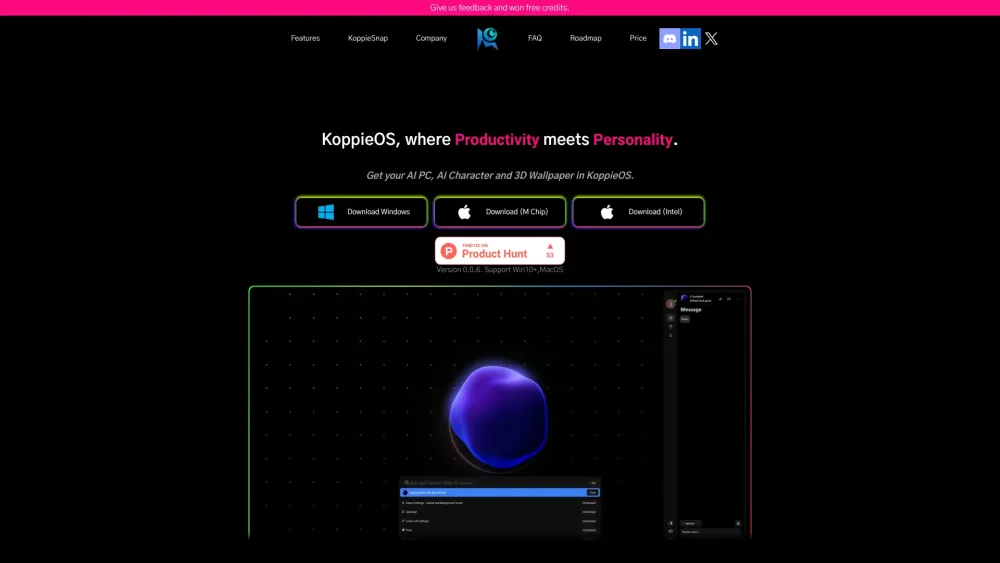

KoppieOS: Seamless GPT-4 Chat & Local LLMs with 3D AI Avatar

Chat with GPT-4 and local LLMs from a personalized desktop space, complete with a 3D AI Avatar and custom wallpapers.

What is KoppieOS?

Experience seamless integration with GPT-4 and local LLMs on your desktop. With KoppieOS, you can chat with advanced AI models and enjoy a personalized environment featuring a 3D AI Avatar and customizable wallpapers.

How to use KoppieOS?

KoppieOS's Core Features

Quick Ask GPT

Save to Note

App Launcher

KoppieOS's Use Cases

Get quick answers from GPT-4

Save important information to notes

Effortlessly launch apps on your computer

KoppieOS Discord

Join the KoppieOS community on Discord: https://discord.com/invite/Vxp3Z8wdVZ.

KoppieOS Support Email & Customer Service

Contact KoppieOS support at: [email protected].

KoppieOS Company

Company name: Koppie Ai Technology Co., Ltd.

KoppieOS Pricing

Check out our pricing details: https://koppieos.koppie.ai/#price.

KoppieOS LinkedIn

Follow us on LinkedIn: https://www.linkedin.com/company/koppieai/.

KoppieOS Twitter

Stay updated with our Twitter feed: https://twitter.com/ZionHuang761927.

KoppieOS GitHub

Download the latest release from our GitHub: https://github.com/KoppieAI/KoppieOS/releases/download/v0.0.6/Koppie_0.0.6.exe.

FAQ from KoppieOS

What is KoppieOS?

KoppieOS offers seamless integration with GPT-4 and local LLMs, allowing users to chat from a personalized desktop space with a 3D AI Avatar and custom wallpapers.

How to use KoppieOS?

Download KoppieOS for Windows or macOS, personalize your desktop environment, and start chatting with GPT-4 and local LLMs using features such as Quick Ask GPT, Save to Note, Chat Editor, and App Launcher.

Does KoppieOS work on Windows and macOS?

Yes, KoppieOS is compatible with both Windows and macOS. iOS and Android versions are planned.

Can the LLMs in KoppieOS search the web?

Yes, all LLMs in KoppieOS can search the web, and there is no extra charge for doing so with GPT-4.

Is KoppieOS an alternative to Raycast AI?

Yes, KoppieOS provides features similar to Raycast's, with GPT-4 available for enhanced AI interactions.

Is KoppieOS free to use?

KoppieOS is free to use and comes with initial AI credits. You can earn more credits through referrals and by giving feedback.

Is KoppieOS an AI copilot?

Yes, KoppieOS functions as an AI copilot for work tasks, while also offering fun features such as new wallpapers and desktop companions.

Can KoppieOS run LLMs locally?

Local LLM support will be available soon. Running models locally protects privacy because no personal data is transmitted to servers during LLM operations.