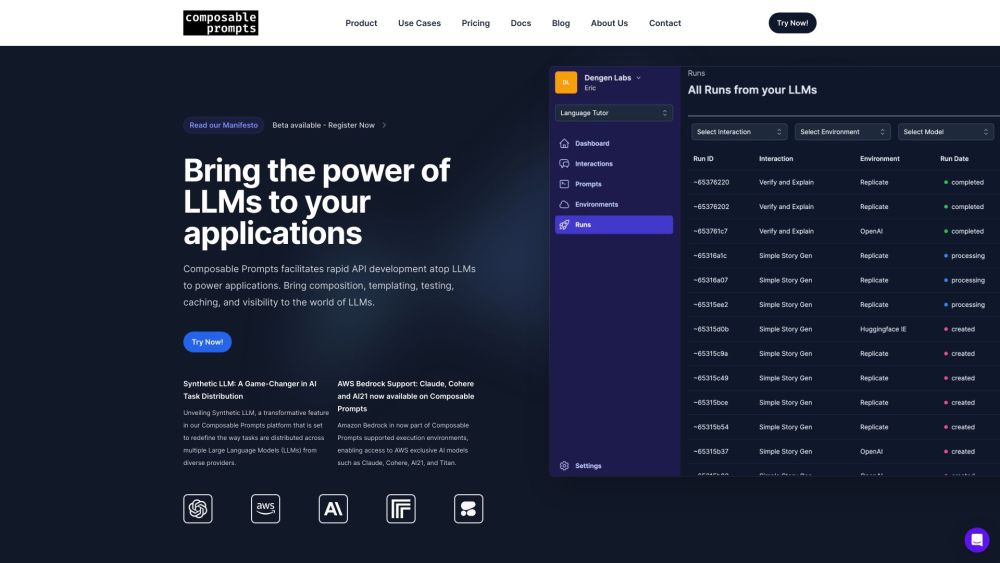

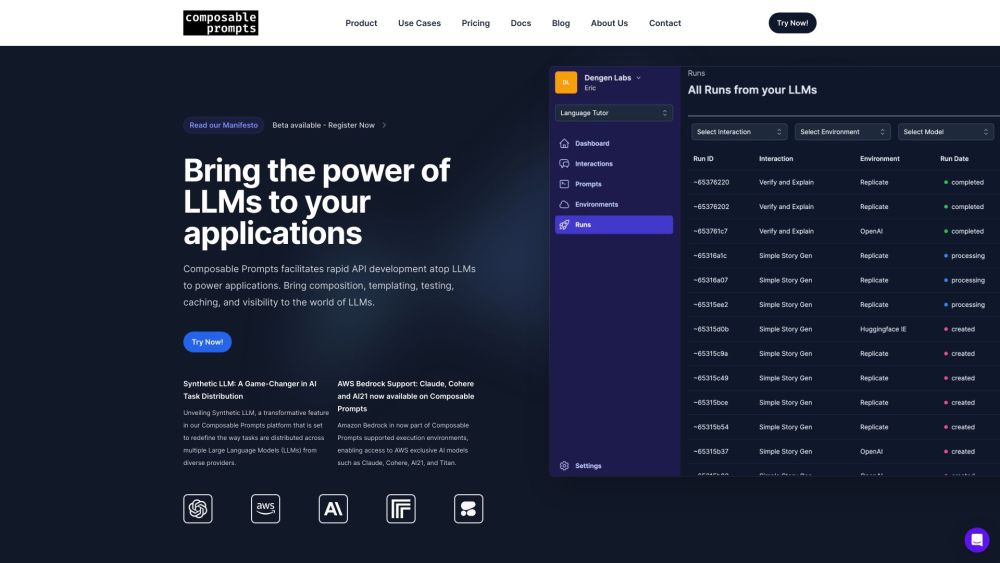

Composable Prompts: Craft, Test & Deploy LLM Tasks & APIs Efficiently

Composable Prompts: Premier platform for crafting, testing, and deploying LLM tasks and APIs with advanced composition, templating, and caching features.

What is Composable Prompts?

Composable Prompts is a platform for crafting, testing, and deploying tasks and APIs built on Large Language Models (LLMs). It provides tools for composition, templating, testing, and caching, and it gives visibility into LLM operations.

Composable Prompts' Core Features

Craft sophisticated prompts with schema validation

Reuse and test prompts across different applications

Utilize multiple models and environments

Enhance performance with intelligent caching

Monitor and debug prompt executions

Switch between models and runtime environments seamlessly

Integrate easily with API, SDK, and CLI

Support content-intensive applications and workflows
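To make the first two features more concrete, here is a minimal sketch of composing a prompt from reusable template parts and validating its inputs against a schema before rendering. It uses only the Python standard library and is not the Composable Prompts SDK: the names `SUMMARY_SCHEMA`, `SYSTEM_PART`, `TASK_PART`, `validate`, and `compose` are hypothetical, and the "schema" is a simple field-to-type mapping assumed for illustration.

```python
from string import Template

# Hypothetical names throughout; this is NOT the Composable Prompts SDK.
# Assumed schema format: field name -> required Python type.
SUMMARY_SCHEMA = {"text": str, "max_words": int}

# Reusable prompt parts that can be shared across applications.
SYSTEM_PART = Template("You are a concise assistant.")
TASK_PART = Template(
    "Summarize the following text in at most $max_words words:\n$text"
)


def validate(params: dict, schema: dict) -> None:
    """Raise ValueError if a field is missing or has the wrong type."""
    for name, expected in schema.items():
        if name not in params:
            raise ValueError(f"missing field: {name}")
        if not isinstance(params[name], expected):
            raise ValueError(f"field {name!r} must be {expected.__name__}")


def compose(parts: list, params: dict, schema: dict) -> str:
    """Validate params, then render each template part and join them."""
    validate(params, schema)
    return "\n\n".join(part.substitute(params) for part in parts)


prompt = compose(
    [SYSTEM_PART, TASK_PART],
    {"text": "LLMs are large.", "max_words": 20},
    SUMMARY_SCHEMA,
)
print(prompt)
```

Validating before rendering means a malformed request fails early, before any model call is made or billed.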

Composable Prompts' Use Cases

Ad optimization

Content compliance

Email personalization

Dynamic content creation for education & learning

Adaptive questioning for educational purposes

Explorative learning with LLMs

Automated ticket categorization in customer support

Real-time information enhancement for customer support

Composable Prompts Support Email & Customer Service Contact

For more contact information, visit the contact us page.

Composable Prompts Company

Composable Prompts operates under the name Composable Prompts SAS.

To learn more about Composable Prompts, visit the about us page.

Composable Prompts Pricing

For pricing details, visit our pricing page.

Composable Prompts LinkedIn

Follow us on the Composable Prompts LinkedIn page.

Composable Prompts Twitter

Stay updated by following the Composable Prompts Twitter account.

Composable Prompts GitHub

Explore our projects on the Composable Prompts GitHub page.

FAQ from Composable Prompts

What is Composable Prompts?

Composable Prompts is the premier platform for crafting, testing, and deploying tasks and APIs powered by Large Language Models (LLMs). It brings composition, templating, testing, caching, and visibility to the world of LLMs.

How do I use Composable Prompts?

With Composable Prompts, you can rapidly develop APIs atop LLMs to power your applications. It allows you to compose powerful prompts, reuse them across your applications, and test them in different environments. You can also optimize performance and cost using intelligent caching and easily switch between models and runtime environments.
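As a rough illustration of the caching and model-switching ideas described above, the sketch below caches responses by a hash of the model name and prompt, and routes the same prompt to different models by name. Everything here is an assumption made for illustration: `CachedRunner`, `fake_model_a`, and `fake_model_b` are hypothetical, the "models" are local stand-in functions, and the cache is a plain exact-match lookup, which may be simpler than whatever the platform does internally.

```python
import hashlib
import json
from typing import Callable, Dict


# Stand-in "models": plain functions so the sketch runs without network access.
def fake_model_a(prompt: str) -> str:
    return f"[model-a] {prompt.upper()}"


def fake_model_b(prompt: str) -> str:
    return f"[model-b] {prompt[::-1]}"


MODELS: Dict[str, Callable[[str], str]] = {
    "model-a": fake_model_a,
    "model-b": fake_model_b,
}


class CachedRunner:
    """Hypothetical runner: exact-match cache keyed by (model, prompt)."""

    def __init__(self, models: Dict[str, Callable[[str], str]]) -> None:
        self.models = models
        self.cache: Dict[str, str] = {}
        self.calls = 0  # number of real (uncached) model invocations

    def _key(self, model: str, prompt: str) -> str:
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def run(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key not in self.cache:
            self.calls += 1
            self.cache[key] = self.models[model](prompt)
        return self.cache[key]


runner = CachedRunner(MODELS)
first = runner.run("model-a", "hello")
second = runner.run("model-a", "hello")  # identical request, served from cache
other = runner.run("model-b", "hello")   # same prompt, different model
print(first, other, runner.calls)
```

Including the model name in the cache key keeps a switch between models from returning a stale answer produced by a different model.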

What are the core features of Composable Prompts?

The core features of Composable Prompts include composing prompts with schema validation, reusing and testing prompts across applications, using multiple models and environments, optimizing performance with intelligent caching, monitoring and debugging prompt executions, and integrating with the API, SDK, and CLI.

What are the use cases of Composable Prompts?

Use cases for Composable Prompts include ad optimization, content compliance, email personalization, dynamic content generation and adaptive questioning for education and learning, explorative learning with LLMs, and automated ticket categorization and real-time information augmentation for customer support.

Does Composable Prompts offer pricing plans?

Composable Prompts does not currently offer any pricing plans. Please contact us for more information.