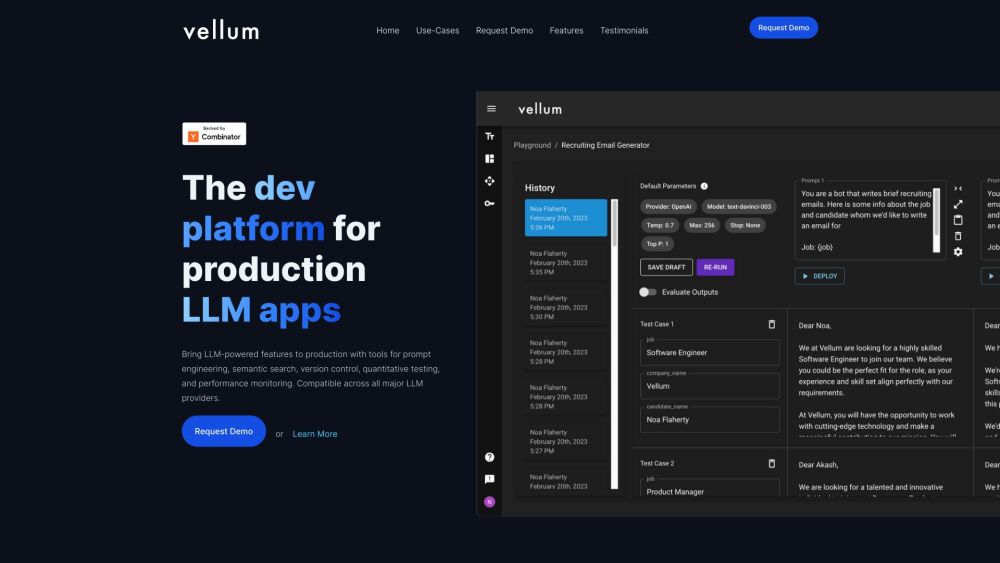

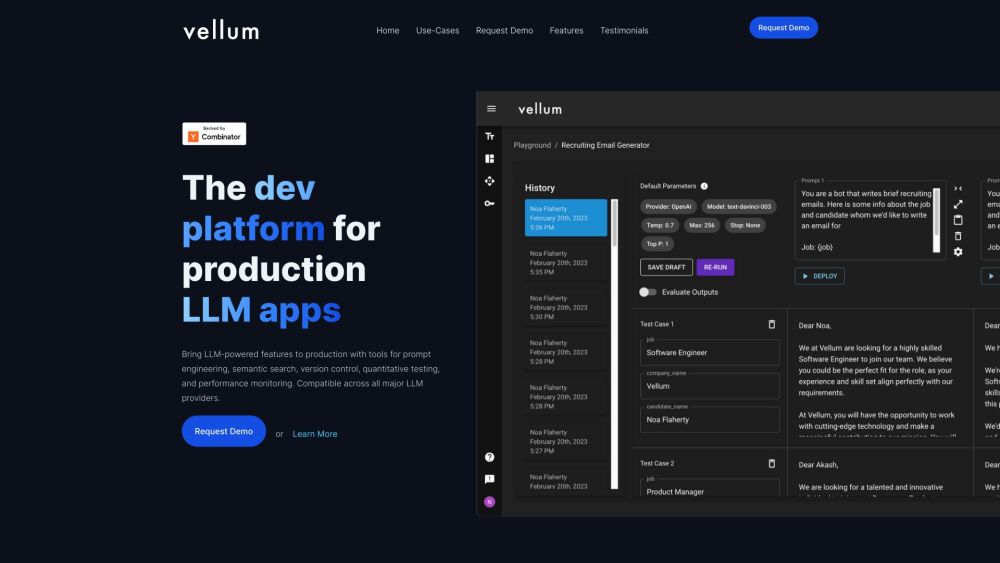

Vellum: Complete LLM App Development Platform with Semantic Search and Version Control

Vellum is a platform for LLM app development with prompt engineering, semantic search, version control, testing, and monitoring. It is compatible with leading LLM providers.

What is Vellum?

Vellum is a robust development platform tailored for building applications powered by large language models (LLMs). It provides essential tools for prompt engineering, semantic search, version control, testing, and monitoring. Vellum is compatible with all leading LLM providers.

How to use Vellum?

Vellum's Core Features

Prompt Engineering

Semantic Search

Version Control

Testing

Monitoring

Vellum's Use Cases

Workflow Automation

Document Analysis

Copilots

Fine-tuning

Q&A over Docs

Intent Classification

Summarization

Vector Search

LLM Monitoring

Chatbots

Semantic Search

LLM Evaluation

Sentiment Analysis

Custom LLM Evaluation

AI Chatbots

Vellum Discord

Join the Vellum community on Discord: https://discord.gg/6NqSBUxF78

Vellum Support Email & Customer Service

For customer support, contact Vellum at: [email protected]. More contact information is available on the contact page: https://www.vellum.ai/landing-pages/talk-to-sales

About Vellum

Company name: Vellum AI

Vellum on LinkedIn

Follow us on LinkedIn: https://www.linkedin.com/company/vellumai/

FAQ from Vellum

What is Vellum?

Vellum is a platform designed for the development of LLM applications, offering tools for prompt engineering, semantic search, version control, testing, and monitoring. It is compatible with all major LLM providers.

How to use Vellum?

Vellum helps users develop LLM-powered applications and move them into production. Alongside prompt engineering and semantic search, the platform supports rapid experimentation, regression testing, version control, and monitoring. It also supports the use of proprietary data in LLM calls, collaboration on prompts and models, and tracking of LLM changes in production. Vellum's UI is designed for ease of use.
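To make the idea of regression testing prompts more concrete, here is a minimal sketch in plain Python. It does not use Vellum's API or SDK. The names `PromptVersion`, `Case`, `fake_model`, and `run_regression` are hypothetical, and the "model" is a deterministic stub standing in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class PromptVersion:
    """A named prompt template (hypothetical type, not part of Vellum)."""
    name: str
    template: str  # str.format-style placeholders, e.g. "{text}"


@dataclass(frozen=True)
class Case:
    """One regression case: template inputs plus a substring the output must contain."""
    inputs: Dict[str, str]
    expected_substring: str


def fake_model(prompt: str) -> str:
    """Deterministic stand-in for an LLM call (assumption for illustration only)."""
    if "refund" in prompt.lower():
        return "intent: billing"
    return "intent: other"


def run_regression(
    version: PromptVersion,
    cases: List[Case],
    model: Callable[[str], str],
) -> List[bool]:
    """Render each case through the prompt version and check the model output."""
    results = []
    for case in cases:
        prompt = version.template.format(**case.inputs)
        output = model(prompt)
        results.append(case.expected_substring in output)
    return results


cases = [
    Case({"text": "I want a refund"}, "billing"),
    Case({"text": "What's the weather?"}, "other"),
]
v1 = PromptVersion("v1", "Classify the intent of: {text}")
v2 = PromptVersion("v2", "Classify: {text}")

# Run the same cases against both prompt versions to spot regressions.
for version in (v1, v2):
    print(version.name, run_regression(version, cases, fake_model))
```

Passing the model in as a plain callable keeps the check independent of any one provider: the same cases can be rerun whenever the prompt or the underlying model changes.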

What can Vellum help me build?

Vellum enables the development and production deployment of LLM-powered applications by offering tools for prompt engineering, semantic search, version control, testing, and monitoring.

Which LLM providers are compatible with Vellum?

Vellum is compatible with all major LLM providers.

What are the key features of Vellum?

The main features of Vellum include prompt engineering, semantic search, version control, testing, and monitoring.

Can Vellum be used for prompt and model collaboration?

Yes, Vellum supports comparison, testing, and collaboration on prompts and models.

Does Vellum include version control?

Yes, Vellum includes version control to track changes and developments.

Can I use my own data in LLM calls with Vellum?

Yes, Vellum allows the integration of proprietary data in LLM calls.

Is Vellum compatible with various providers?

Yes, Vellum is provider agnostic, enabling the use of different providers and models as needed.

Can I get a personalized demo of Vellum?

Yes, you can request a personalized demo from the Vellum team.

What do users say about Vellum?

Users appreciate Vellum for its intuitive interface, quick deployment, comprehensive prompt testing, collaborative features, and the ability to compare different model providers.