Token Counter for OpenAI Models: Track Tokens, Manage Costs Effectively

Token Counter for OpenAI Models: Effortlessly track tokens, stay within limits, and manage costs efficiently. Optimize your usage today!

Understanding Token Counter for OpenAI Models

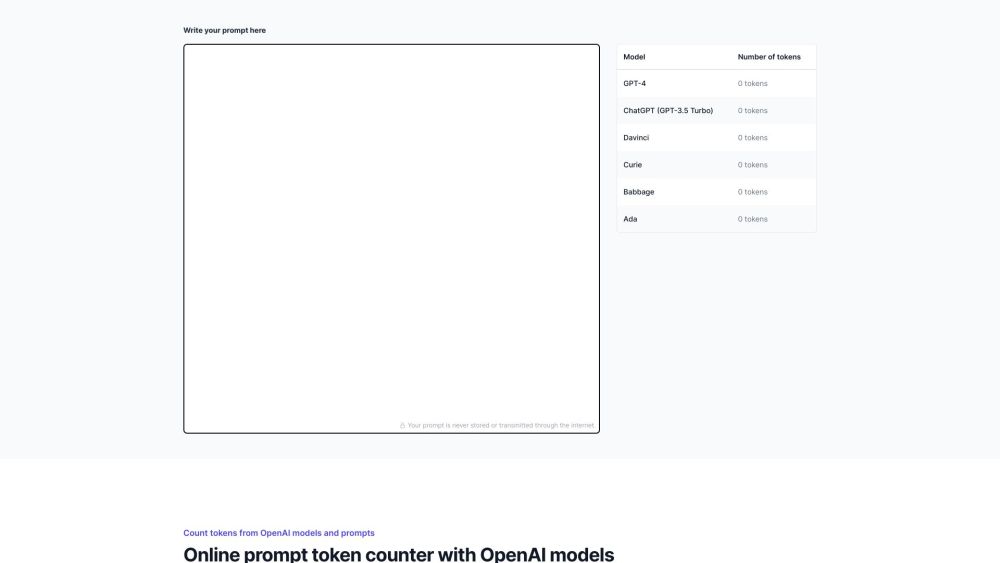

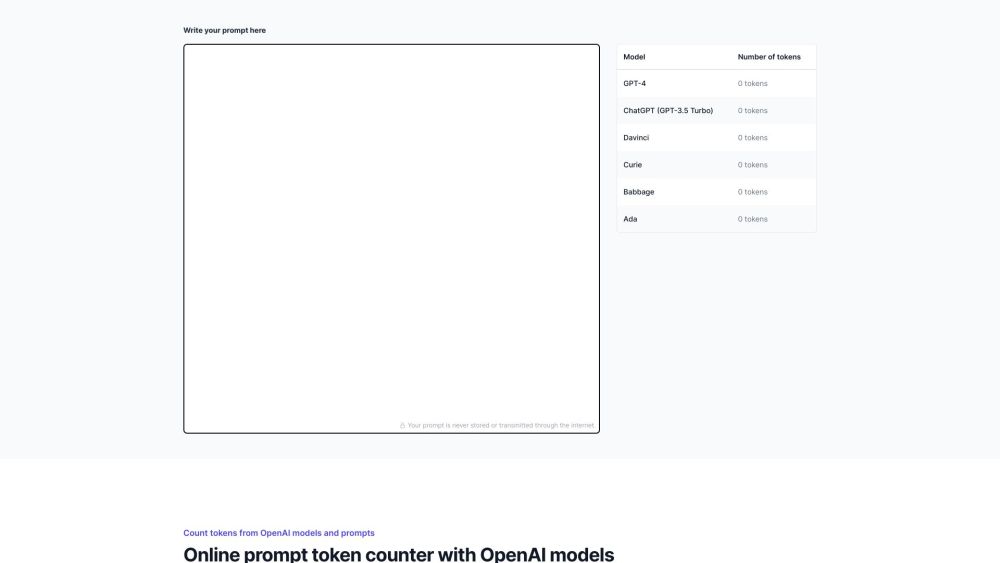

The Token Counter for OpenAI Models is an online tool that counts the tokens in prompts written for OpenAI models. Knowing the count before you send a prompt helps you stay within the model's token limit and keep usage costs under control.

Key Features of Token Counter for OpenAI Models

The main features of the Token Counter for OpenAI Models include:

- Counting tokens for both OpenAI models and prompts
- Ensuring prompts adhere to the model's token restrictions
- Monitoring token usage to manage costs efficiently
- Aiding in response token management
- Promoting clear and concise communication through optimized prompts
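To make the cost-monitoring feature concrete, here is a minimal sketch of how a token count can be turned into a cost estimate. It is not the tool's own code: the function name `estimate_cost` and the per-1,000-token prices are hypothetical and chosen only for illustration, so substitute the current rates for the model you use.

```python
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimate the cost of one request from its token counts.

    Prices are given per 1,000 tokens; the result is in the same currency.
    """
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = prompt_tokens / 1000 * input_price_per_1k
    output_cost = completion_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


# Hypothetical prices (NOT real OpenAI rates), used only to show the arithmetic.
cost = estimate_cost(prompt_tokens=1200, completion_tokens=300,
                     input_price_per_1k=0.5, output_price_per_1k=1.5)
print(f"Estimated cost: {cost:.4f}")
```

Input and output tokens are priced separately here because providers commonly bill them at different rates; if your model uses a single rate, pass the same value for both.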

Applications of Token Counter for OpenAI Models

The Token Counter for OpenAI Models is useful in various scenarios, such as:

- Drafting emails or documents using OpenAI models
- Generating answers to questions or queries
- Creating conversational prompts for chatbots
- Writing creative stories or prompts

Token Counter for OpenAI Models Company

The Token Counter for OpenAI Models is developed by Borah Agency.

FAQs About Token Counter for OpenAI Models

What is Token Counter for OpenAI Models?

The Token Counter for OpenAI Models is an online tool that helps you track token usage in OpenAI models and prompts, ensuring you stay within the token limits and manage costs effectively.

How do I use the Token Counter for OpenAI Models?

To use the Token Counter for OpenAI Models, follow these steps:

1. Know the token limits of the OpenAI model you're working with.
2. Preprocess your prompt just as you would in actual interactions.
3. Count the tokens in your prompt, including all elements like words, punctuation, and spaces.
4. Include the model's response tokens in your count and modify the prompt if necessary.
5. Iterate and refine your prompt until it fits within the allowed token count.
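The steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the tool's implementation: `rough_token_count` is a placeholder that assumes roughly four characters per token for English text, and `fits_budget` and `trim_to_budget` are hypothetical helper names. The limit and the response reserve in the example are also made-up values.

```python
import math
from typing import Callable


def rough_token_count(text: str) -> int:
    """Placeholder estimate: about 4 characters per token (English text).

    This is an assumption for illustration, not a real tokenizer.
    """
    return math.ceil(len(text) / 4)


def fits_budget(prompt: str, context_limit: int, reserved_for_response: int,
                count_tokens: Callable[[str], int] = rough_token_count) -> bool:
    """Steps 3-4: count prompt tokens and add the tokens kept for the reply."""
    return count_tokens(prompt) + reserved_for_response <= context_limit


def trim_to_budget(prompt: str, context_limit: int, reserved_for_response: int,
                   count_tokens: Callable[[str], int] = rough_token_count) -> str:
    """Step 5: drop trailing words until the prompt fits the budget."""
    if reserved_for_response > context_limit:
        raise ValueError("response reserve exceeds the context limit")
    words = prompt.split()  # Step 2: normalize whitespace before counting
    while words and not fits_budget(" ".join(words), context_limit,
                                    reserved_for_response, count_tokens):
        words.pop()
    return " ".join(words)


# Step 1: a hypothetical limit of 100 tokens, with 50 kept for the response.
long_prompt = "word " * 100
short_prompt = trim_to_budget(long_prompt, context_limit=100,
                              reserved_for_response=50)
print(fits_budget(long_prompt, 100, 50), fits_budget(short_prompt, 100, 50))
```

Dropping trailing words is the simplest possible refinement strategy; in practice you would usually rewrite or summarize the prompt instead. For exact counts, a real tokenizer can be passed as `count_tokens`, for example a function built on the `tiktoken` library such as `lambda s: len(tiktoken.encoding_for_model(model_name).encode(s))`.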