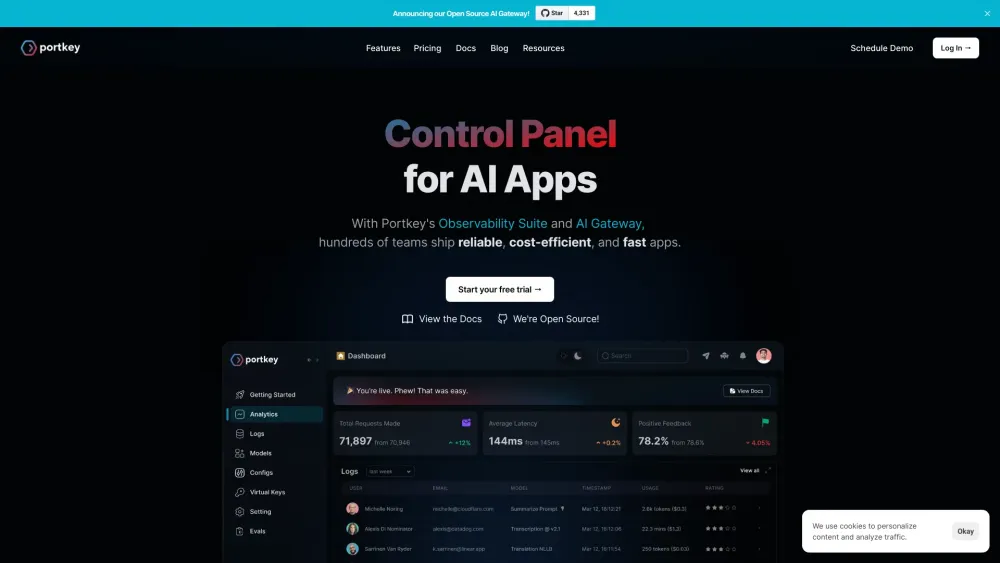

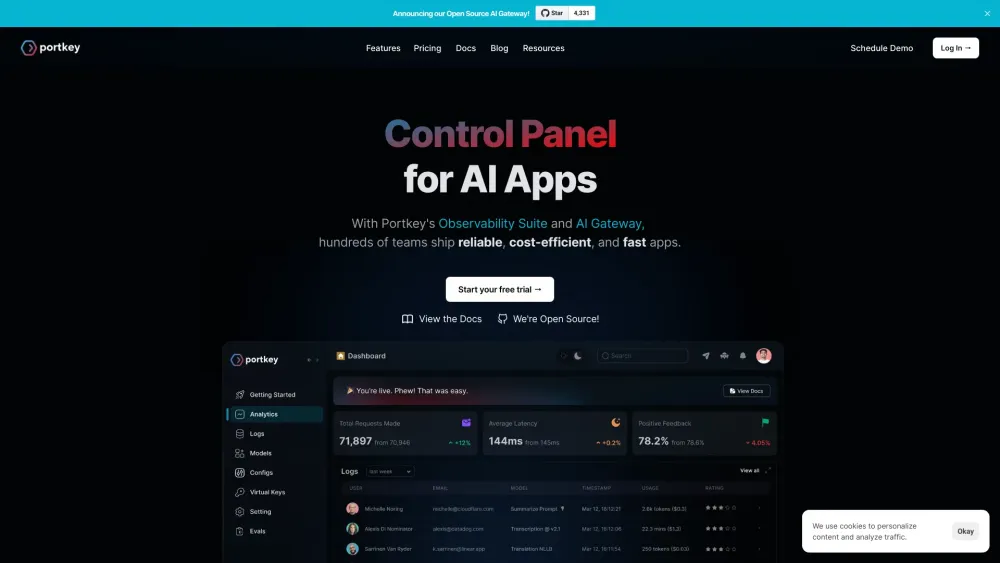

Portkey.ai: Develop, Launch & Maintain Generative AI Apps Faster

Portkey.ai is an LLMOps platform that speeds up AI app development and maintenance, helping teams develop, launch, and iterate on generative AI apps.

What is Portkey.ai - Control Panel for AI Apps?

Portkey.ai helps businesses develop, launch, and manage their generative AI applications more efficiently. This LLMOps platform speeds up development and improves the performance of your AI-powered applications.

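How to use Portkey.ai - Control Panel for AI Apps?

Replace the OpenAI API base path in your app with Portkey's API endpoint. Prompts and parameters can then be managed in one place.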

Core Features of Portkey.ai - Control Panel for AI Apps

Observability Suite

AI Gateway

Prompt Playground

Use Cases for Portkey.ai - Control Panel for AI Apps

Monitor costs, quality, and latency

Route to 100+ LLMs, reliably

Build and deploy effective prompts

FAQ from Portkey.ai - Control Panel for AI Apps

What is Portkey.ai - Control Panel for AI Apps?

Portkey.ai enables businesses to develop, launch, and manage generative AI applications more efficiently. It is an LLMOps platform designed to streamline development and enhance app performance.

How to use Portkey.ai - Control Panel for AI Apps?

Integrate Portkey by replacing the OpenAI API base path in your app with Portkey's API endpoint. This centralizes management of prompts and parameters.

How does Portkey work?

Once you replace the OpenAI API base path in your app with Portkey's endpoint, every request passes through Portkey on its way to OpenAI. That gives you a single point of control where you can manage prompts and parameters in one place.
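As a rough illustration of the base path swap, the sketch below builds the same chat request twice, once against OpenAI directly and once against a gateway. `GATEWAY_BASE` is a placeholder, not Portkey's real endpoint, and the helper name `build_chat_request` and the model name are assumptions made for this example. A real gateway may also require extra authentication headers; check Portkey's documentation for the actual values.

```python
import json
import urllib.request

OPENAI_BASE = "https://api.openai.com/v1"
# Placeholder only: substitute the endpoint given in Portkey's documentation.
GATEWAY_BASE = "https://gateway.example.com/v1"


def build_chat_request(base_url: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request against base_url."""
    body = {
        "model": "gpt-4o-mini",  # example model name, an assumption
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Only the base URL differs; the path and payload are identical.
direct = build_chat_request(OPENAI_BASE, "sk-placeholder", "Hello")
routed = build_chat_request(GATEWAY_BASE, "sk-placeholder", "Hello")
print(direct.full_url)
print(routed.full_url)
```

Because the rest of the request is unchanged, the switch can usually be confined to a single configuration value in the app.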

How do you store my data?

Portkey is ISO 27001 and SOC 2 certified and GDPR compliant. We follow best practices for security, data storage, and retrieval, with all data encrypted in transit and at rest. For enterprises, we offer managed hosting for private cloud deployment.

Will this slow down my app?

No. We keep latency minimal through smart caching, automatic fail-over, and edge compute layers, which may even improve your app's overall performance.
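To make the caching and fail-over idea concrete, here is a minimal sketch of the general pattern. It is not Portkey's implementation: the class `CachingFailoverRouter` and the two stand-in providers are hypothetical names invented for this example, and the cache is a plain in-memory dictionary keyed by the exact prompt.

```python
from typing import Callable, Dict, List, Optional

Provider = Callable[[str], str]


class CachingFailoverRouter:
    """Try providers in order and cache the first successful answer."""

    def __init__(self, providers: List[Provider]) -> None:
        self.providers = providers
        self.cache: Dict[str, str] = {}

    def complete(self, prompt: str) -> str:
        # A cache hit skips every provider call.
        if prompt in self.cache:
            return self.cache[prompt]
        last_error: Optional[Exception] = None
        for provider in self.providers:
            try:
                result = provider(prompt)
            except Exception as exc:  # fail over to the next provider
                last_error = exc
                continue
            self.cache[prompt] = result
            return result
        raise RuntimeError("all providers failed") from last_error


calls = {"primary": 0, "backup": 0}


def flaky_primary(prompt: str) -> str:
    """Stand-in provider that always fails."""
    calls["primary"] += 1
    raise ConnectionError("primary unavailable")


def backup(prompt: str) -> str:
    """Stand-in provider that always succeeds."""
    calls["backup"] += 1
    return f"echo: {prompt}"


router = CachingFailoverRouter([flaky_primary, backup])
first = router.complete("hi")   # primary fails, backup answers
second = router.complete("hi")  # served from the cache
print(first, second, calls)
```

The second call never reaches a provider, which is how a gateway layer can in principle reduce latency for repeated requests rather than add to it.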