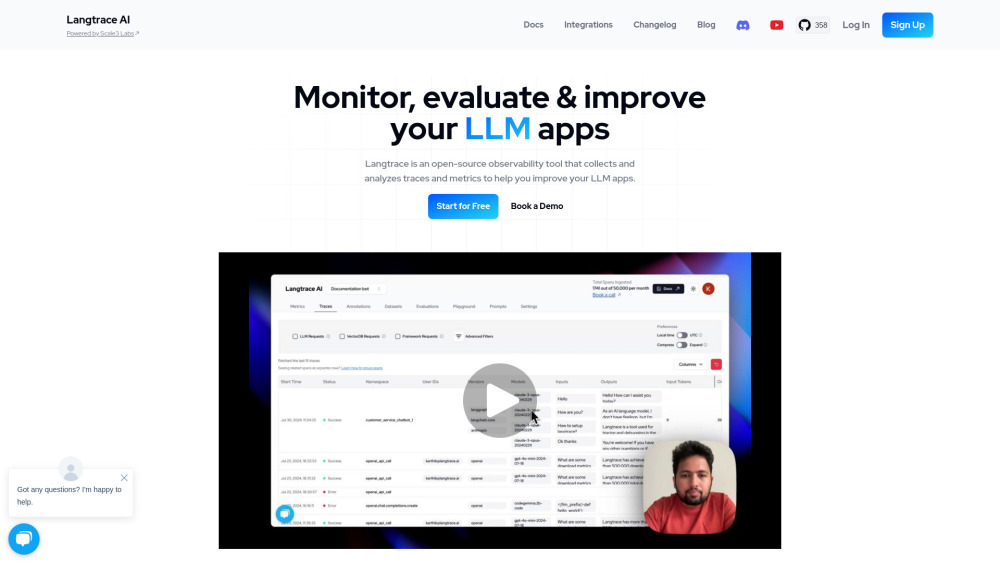

Langtrace.ai: Open-Source LLM App Observability & Evaluation Platform

Langtrace.ai: the open-source platform for LLM observability and evaluation, built to help you understand and optimize your AI application's performance.

Understanding Langtrace.ai

Langtrace.ai is an open-source platform designed to enhance the observability and evaluation of your applications powered by large language models (LLMs). It simplifies the process by automatically creating OpenTelemetry-compatible traces that capture crucial data such as prompts, completions, token usage, costs, model hyperparameters, and latency metrics. With Langtrace.ai, you can integrate these capabilities with just a couple of lines of code.
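To make the list of captured data concrete, here is a minimal, self-contained sketch of the kind of record such a trace might hold for a single LLM call. It does not use Langtrace or the OpenTelemetry SDK. `Span`, `llm_span`, `fake_llm_call`, the per-token prices, and the `latency_ms` and `cost_usd` keys are all hypothetical. The `gen_ai.*` attribute names are only loosely modeled on OpenTelemetry's GenAI semantic conventions, so Langtrace's real attribute names may differ.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator

# Hypothetical prices in USD per 1,000 tokens; real prices depend on the model and provider.
PRICE_PER_1K = {"example-model": {"input": 0.5, "output": 1.5}}


@dataclass
class Span:
    """A minimal stand-in for an OpenTelemetry span: a name plus key/value attributes."""
    name: str
    attributes: Dict[str, Any] = field(default_factory=dict)

    def set_attribute(self, key: str, value: Any) -> None:
        self.attributes[key] = value


@contextmanager
def llm_span(name: str, model: str, **hyperparams: Any) -> Iterator[Span]:
    """Time the enclosed LLM call, then record its latency and (if priced) its cost."""
    span = Span(name)
    span.set_attribute("gen_ai.request.model", model)
    # Record model hyperparameters such as temperature or max_tokens.
    for key, value in hyperparams.items():
        span.set_attribute(f"gen_ai.request.{key}", value)
    start = time.perf_counter()
    try:
        yield span
    finally:
        span.set_attribute("latency_ms", (time.perf_counter() - start) * 1000)
        prices = PRICE_PER_1K.get(model)
        inp = span.attributes.get("gen_ai.usage.input_tokens", 0)
        out = span.attributes.get("gen_ai.usage.output_tokens", 0)
        if prices is not None:
            # Cost = tokens x price per 1K tokens, summed over input and output.
            span.set_attribute("cost_usd", (inp * prices["input"] + out * prices["output"]) / 1000)


def fake_llm_call(prompt: str) -> Dict[str, Any]:
    """Placeholder for a real model call; returns a canned completion and rough token counts."""
    return {"completion": "Hello!", "input_tokens": len(prompt.split()), "output_tokens": 1}


with llm_span("chat", "example-model", temperature=0.2, max_tokens=64) as span:
    prompt = "Say hello to the user"
    span.set_attribute("gen_ai.prompt", prompt)
    result = fake_llm_call(prompt)
    span.set_attribute("gen_ai.completion", result["completion"])
    span.set_attribute("gen_ai.usage.input_tokens", result["input_tokens"])
    span.set_attribute("gen_ai.usage.output_tokens", result["output_tokens"])

print(span.attributes)
```

A real tracing SDK would export spans like this to a backend instead of keeping them in memory; the sketch only illustrates which fields a trace can carry and how cost follows from token counts and per-token prices.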

Getting Started with Langtrace.ai

Key Features of Langtrace.ai

- Comprehensive Tracing
- In-Depth Evaluations
- Efficient Prompt Management
- Dataset Integration

Applications of Langtrace.ai

- LLM Operations
- Machine Learning Operations

Langtrace.ai Support and Contact Information

For customer service inquiries, you can reach out to Langtrace.ai support at: [email protected].

About Langtrace.ai Company

Langtrace.ai is developed by Scale3 Labs, a company dedicated to creating innovative tools for modern software development.

Access Langtrace.ai

Login to Langtrace.ai: https://www.langtrace.ai/login

Sign up for Langtrace.ai: https://www.langtrace.ai/signup

Follow Langtrace.ai Online

You can follow Langtrace.ai on YouTube: https://www.youtube.com/@Langtrace

Connect on LinkedIn: https://www.linkedin.com/company/scale3labs

Follow on Twitter: https://twitter.com/langtrace_ai

Explore on GitHub: https://github.com/Scale3-Labs/langtrace

Frequently Asked Questions about Langtrace.ai

What is Langtrace.ai?

Langtrace.ai is an open-source platform for monitoring, tracing, and evaluating applications powered by large language models. It provides detailed metrics and insights through OpenTelemetry-compatible traces, which makes it useful for developers building LLM applications.

How do I use Langtrace.ai?

To get started, install the Langtrace SDK for Python or TypeScript, generate your API key, and initialize the SDK using this key. This simple setup enables you to begin tracking and improving your application's performance right away.
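For the Python SDK, a startup helper might look like the sketch below. The package name `langtrace-python-sdk` and the `from langtrace_python_sdk import langtrace` / `langtrace.init(api_key=...)` calls follow the project's README at the time of writing, so check the current docs before relying on them. The `LANGTRACE_API_KEY` environment variable and the `init_langtrace` helper are assumptions of this sketch, not part of the documented API.

```python
import os

# Assumption: the API key generated in Langtrace is exported as LANGTRACE_API_KEY.
API_KEY_ENV = "LANGTRACE_API_KEY"


def init_langtrace() -> bool:
    """Initialize the Langtrace Python SDK if an API key is available.

    Returns True when the SDK was initialized and False when no key is set,
    so the application can still run (untraced) where no key is configured.
    """
    api_key = os.environ.get(API_KEY_ENV)
    if not api_key:
        return False
    # Requires `pip install langtrace-python-sdk`; imported lazily so this
    # module still loads in environments where the SDK is not installed.
    from langtrace_python_sdk import langtrace
    langtrace.init(api_key=api_key)
    return True


if __name__ == "__main__":
    # Call once at startup, ideally before importing LLM client libraries,
    # so the SDK has a chance to instrument them.
    print("Langtrace enabled:", init_langtrace())
```

Reading the key from the environment keeps it out of source control, and returning a boolean lets the caller decide whether running without tracing is acceptable.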